Technical SEO matters until it doesn’t. If you want your pages to show up in search results at all, they need to be crawlable and indexable, at the very least. Beyond that, though, many technical adjustments matter far less than quality content and quality backlinks!

That’s why we put together this guide: to help you understand what technical SEO really is, so you can devote your time and effort where it really makes a difference.

What is Technical SEO?

Technical SEO is the practice of optimizing your website so search engines can discover, crawl, interpret, and index your content. It’s often grouped under “onsite SEO,” and it can improve your search visibility and organic rankings by making sure your site meets search engines’ technical requirements.

What does it focus on?

✔ Ensures search engines can access and understand your website

✔ Fixes issues that hold back your rankings (speed, indexation, mobile-friendliness)

✔ Increases organic traffic without changing your content

Why Does it Matter? Without technical SEO, your great content may never rank! 🚀

Understanding Crawling

Welcome back, learners! Today, we’re diving into the fascinating world of web crawling—the process search engines use to explore and catalog your website. Think of it like a librarian meticulously scanning every book in a library to add them to the catalog. Let’s break down how you can guide these digital librarians (aka crawlers) to work efficiently on your site.

How Crawling Works

Crawling is the backbone of search engines. Imagine a robot spider scuttling across the web, following links (like breadcrumb trails) to discover pages. When it finds your site, it “reads” your content and notes any new links to explore. But you can influence where these crawlers go. Let’s explore your options!

Robots.txt: The Traffic Director

A robots.txt file acts like a map for search engines, telling them which areas of your site are open for exploration and which are off-limits. Place this file in your website’s root directory (like the front desk of our library analogy).
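To see how a crawler reads these rules, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. The robots.txt content and `example.com` URLs are hypothetical, and the parser is only a stand-in: Python applies the first matching rule, while Google uses the most specific (longest) match, so results can differ when `Allow` and `Disallow` rules overlap.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, as it would be served from https://www.example.com/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt):
    """Parse robots.txt text directly instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    for url in ("https://www.example.com/blog/technical-seo/",
                "https://www.example.com/admin/settings"):
        # can_fetch() answers: may this user agent crawl this URL?
        allowed = parser.can_fetch("Googlebot", url)
        print(f"{url} -> {'crawlable' if allowed else 'blocked'}")
    print("Sitemaps:", parser.site_maps())
```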

✨ Did You Know?

Google might still index a page (list it in search results) even if it’s blocked in robots.txt, as long as other sites link to it! To fully hide a page, use password protection, or a noindex meta tag on a page that crawlers are allowed to access. Check this flowchart for a step-by-step guide!

Controlling Crawl Speed

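Want to limit how often search engines visit? Many crawlers respect the crawl-delay directive in robots.txt; think of it as setting visiting hours for a busy guest! However, Google plays by its own rules: Googlebot ignores crawl-delay, and Search Console’s old crawl-rate setting was retired in early 2024. To slow Googlebot down temporarily, Google suggests returning 500, 503, or 429 status codes, and you can watch how often it visits in Search Console’s “Crawl Stats” report.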
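For crawlers that do honor crawl-delay, the value applies per user-agent group. The sketch below (hypothetical robots.txt and a hypothetical helper, `seconds_between_requests`) shows how a polite crawler might read it with Python’s `urllib.robotparser`. It says nothing about Googlebot’s behavior, since Googlebot ignores the directive anyway. Note that Python’s parser only accepts whole-number delays.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: a Googlebot group without a delay, and a catch-all group with one.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /tmp/

User-agent: *
Crawl-delay: 10
"""


def seconds_between_requests(robots_txt, user_agent, default=1.0):
    """Return the Crawl-delay from the group matching user_agent, or a default if none is set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # crawl_delay() looks only at the group that matches this user agent; it returns None if unset.
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default
```

A crawler would then pause for that many seconds (for example with `time.sleep`) between requests to the same site. Here `"Bingbot"` falls into the catch-all group and gets 10 seconds, while `"Googlebot"` matches its own group, which sets no delay.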

Restricting Access Like a Pro

Need to keep pages available to certain users but invisible to search engines? Use:

Login Systems: Like a members-only club.

Password Protection (HTTP Authentication): A digital “Do Not Enter” sign.

IP Whitelisting: Only specific “addresses” (IPs) can access the page.

Perfect for internal company hubs, subscription content, or test sites!
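Access control normally lives in your web server or CMS configuration rather than in your own code, but the checks behind IP allowlisting and HTTP Basic authentication are simple. This minimal Python sketch uses hypothetical networks, credentials, and function names. A crawler that fails either check gets an error response instead of your content, so there is nothing to index. Basic authentication only protects credentials when it runs over HTTPS.

```python
import base64
import hmac
import ipaddress

# Hypothetical allowlist: an internal network plus one VPN exit address.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("198.51.100.7/32"),
]


def is_ip_allowed(client_ip, networks=ALLOWED_NETWORKS):
    """IP allowlisting: True only if the client address is inside one of the allowed networks."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in networks)


def is_basic_auth_valid(authorization_header, user, password):
    """HTTP Basic authentication: the header carries base64("user:password") after "Basic "."""
    scheme, _, encoded = authorization_header.partition(" ")
    if scheme.lower() != "basic" or not encoded:
        return False
    try:
        supplied = base64.b64decode(encoded, validate=True)
    except ValueError:  # binascii.Error (invalid base64) is a subclass of ValueError
        return False
    expected = f"{user}:{password}".encode("utf-8")
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(supplied, expected)
```

When a check fails, the server would typically answer 401 (with a `WWW-Authenticate: Basic` header) for missing or wrong credentials, or 403 for a disallowed IP address.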

Tracking Crawler Activity

Curious what Google’s up to on your site? Head to Google Search Console’s “Crawl Stats” report. It’s like a visitor log showing how often Googlebot stops by, which pages it explores, and if it hits any roadblocks.

For a deeper dive, check your server logs (raw records of every request to your site). Tools like AWStats or Webalizer (often available in cPanel) can turn this data into insights, which is ideal for tech-savvy users!
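If you want to peek at raw logs yourself, a few lines of Python can count Googlebot requests per URL and status code. This sketch assumes the common Apache/Nginx “combined” log format and uses made-up sample lines; `googlebot_hits` is a hypothetical helper name. Anyone can put “Googlebot” in a user-agent string, so verify important findings with a reverse DNS lookup, as Google recommends.

```python
import re
from collections import Counter

# Combined log format: ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Made-up sample lines for illustration.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Jun/2025:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jun/2025:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Jun/2025:10:01:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]


def googlebot_hits(lines):
    """Count requests whose user agent claims to be Googlebot, keyed by (path, status)."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), int(match.group("status")))] += 1
    return hits


if __name__ == "__main__":
    # Most-requested (path, status) pairs first; 4xx/5xx rows are Googlebot's roadblocks.
    for (path, status), count in googlebot_hits(SAMPLE_LOG).most_common():
        print(f"{count:>4}  {status}  {path}")
```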

Crawl Budget: Don’t Waste It!

Every site has a “crawl budget”: roughly how many pages search engines are able and willing to crawl on it in a given period. Popular, frequently updated pages (like a trending blog) get more attention. Older or rarely linked pages? They might gather dust.

Key Takeaways

- Use robots.txt to guide crawlers, but pair it with other tools to block indexing: a noindex meta tag only works on a page crawlers can reach.

- Restrict sensitive pages with logins or passwords, not just robots.txt.

- Monitor crawl activity in Search Console (and your server logs) to spot issues early.

Ready to optimize your site’s crawlability? Keep experimenting, and remember: a well-crawled site is a visible site! 🚀

Understanding Indexing

Welcome back! Now that we’ve covered crawling, let’s talk about indexing—the process where search engines store and organize your pages so they can appear in search results. Think of it as filing a book into the right library shelf. If your page isn’t indexed, it’s invisible to searchers. Let’s learn how to stay visible!

Robots Directives: The “Do Not Disturb” Sign for Pages

Sometimes, you don’t want a page to appear in search results. Enter the robots meta tag, a tiny HTML snippet that tells search engines how to handle your page. Place it in the <head> section of your page, like this:

<meta name="robots" content="noindex" />

What it does: The noindex directive says, “Hey Google, crawl this page, but don’t add it to your index.”

Use cases: Temporary pages, duplicate content, or private pages you don’t want publicly searchable.

⚠️ Pro Tip: noindex only works if the page isn’t blocked by robots.txt. If crawlers can’t access the page, they can’t see your noindex tag!
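The interaction in the tip above can be sketched in Python: check robots.txt first, and only if the page is crawlable does its robots meta tag matter. The helper names and sample pages are hypothetical, and the sketch ignores the `X-Robots-Tag` HTTP header, which can carry the same directives.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(part.strip().lower() for part in content.split(","))


def indexing_status(robots_txt, url, html, user_agent="Googlebot"):
    """Hypothetical helper: what a crawler can learn about a page's indexability."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(user_agent, url):
        # The crawler never downloads the page, so a noindex tag inside it is never seen.
        return "blocked: noindex never seen"
    meta = RobotsMetaParser()
    meta.feed(html)
    if "noindex" in meta.directives:
        return "noindex: crawled but not indexed"
    return "indexable"
```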

Canonicalization: Solving the Duplicate Content Puzzle

Imagine having 10 copies of the same book in a library—it’s confusing! Similarly, duplicate content (like multiple URLs showing the same product) confuses search engines. Google fixes this by picking one “canonical” URL to represent the page in its index.

How Google Chooses the Canonical URL

Google uses signals like:

Canonical tags: A tag in your HTML that says, “This is the main version!”

<link rel="canonical" href="https://yourdomain.com/main-page/" />

Internal links: Which version do you link to most often?

Sitemaps: Listing a URL in your sitemap is a (weaker) signal that it’s the preferred version.

Redirects: If Page A redirects to Page B, Google knows Page B is the star.

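🔍 What if Google ignores your canonical tag? It happens! Google treats the canonical tag as a strong hint rather than a command, and it may pick a different URL when other signals, such as redirects, internal links, or sitemaps, point elsewhere.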
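To audit a page’s canonical tag yourself, a small Python sketch can extract `rel="canonical"` and compare it with the URL you prefer. The function names and URLs are hypothetical, and the normalization is deliberately simple (it lowercases the scheme and host and drops fragments). Relative hrefs are resolved here, but Google recommends absolute URLs in canonical tags.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit, urlunsplit


class CanonicalParser(HTMLParser):
    """Collects href values from <link rel="canonical"> tags."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def normalize(url):
    """Lowercase scheme and host and drop the fragment so trivially different URLs compare equal."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, ""))


def check_canonical(page_url, html, preferred_url):
    """Return "ok", "missing", "conflicting", or "mismatch"."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing"
    if len(set(parser.canonicals)) > 1:
        return "conflicting"  # several different canonical tags on one page
    declared = urljoin(page_url, parser.canonicals[0])  # resolve a relative href against the page
    return "ok" if normalize(declared) == normalize(preferred_url) else "mismatch"
```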

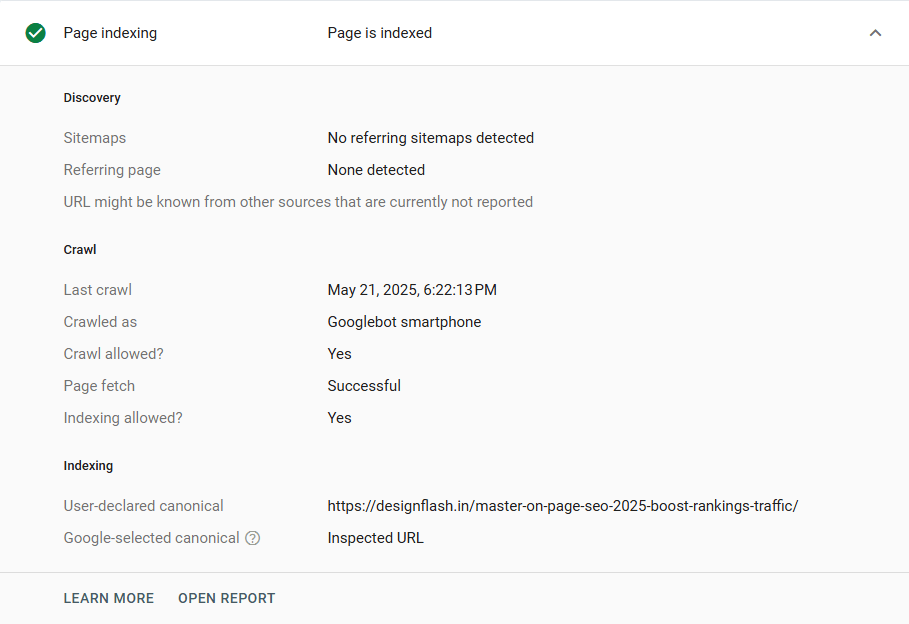

How to Check How Google Sees Your Page

Want to know if your page is indexed, and which version Google chose as canonical? Use the URL Inspection Tool in Google Search Console:

- Enter your page’s URL.

- See if it’s marked as “Indexed.”

- Check the “Google-selected canonical” to confirm it matches your preferred version.

This tool is like a backstage pass to your page’s search engine status!

Key Takeaways

- Indexing = Visibility: No index? No search traffic.

- Block with care: Use noindex for pages you want crawled but not indexed. For total privacy, use logins or password protection; don’t combine robots.txt with noindex, because crawlers never see the noindex tag on a blocked page.

- Canonicalize wisely: Guide Google to your preferred URL, but don’t panic if it picks another; focus on strong signals (tags, internal links).

Easy Technical SEO Fixes for Faster Results

Want Google to find your pages? Don’t hide them with ‘noindex’ tags or block Googlebot in robots.txt. Create an XML sitemap (think treasure map for bots), link pages internally, use canonical tags to squash duplicate content, set up 301 redirects for dead URLs, and monitor indexing in Google Search Console. Be the tour guide Google needs—no invisibility cloaks allowed.
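An XML sitemap is just a list of URLs in the format defined at sitemaps.org. Here is a minimal sketch with Python’s `xml.etree.ElementTree`; the URLs, dates, and the `build_sitemap` name are placeholders, and a real site would generate the list from its CMS or database. You can then reference the file from robots.txt with a `Sitemap:` line or submit it in Search Console.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (absolute URL, last-modified date as YYYY-MM-DD)."""
    ET.register_namespace("", SITEMAP_NS)  # serialize as the default xmlns, without a prefix
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    # ElementTree escapes characters such as "&" in URLs automatically.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.example.com/", "2025-06-17"),
        ("https://www.example.com/blog/technical-seo/", "2025-06-10"),
    ]))
```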

Reclaim Lost Links to Strengthen Your SEO

Over time, website URLs often change due to redesigns, content updates, or structural shifts. However, many of these old URLs may still have valuable backlinks pointing to them. If these outdated links aren’t redirected to your current pages, you’re essentially forfeiting the SEO equity those links provide. A simple 301 redirect can solve this, instantly transferring that value to your new pages. This process is one of the fastest ways to “build” links—without outreach or new content creation.

To identify these opportunities, use a tool such as Ahrefs, Ubersuggest, or Semrush. In Ahrefs’ Site Explorer, for example:

- Enter your domain.

- Navigate to the “Best by links” report.

- Apply the “404 not found” filter.

- Sort results by “Referring domains” to prioritize pages with the most backlinks.

By redirecting these broken URLs, you recover lost authority and improve your site’s rankings. It’s a quick win with lasting impact—don’t let outdated links go to waste.
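Once a tool has given you the broken URLs, the remaining work is deciding where each one should point. This Python sketch takes hypothetical export rows (path, status, referring domains) plus a mapping you choose by hand, keeps only 404s that have a new home, sorts them by referring domains, and renders Apache `Redirect 301` lines. The function names are illustrative; adapt the output to whatever server or CMS you use.

```python
# Hypothetical rows from a backlink tool's export: (old path, HTTP status, referring domains).
BROKEN_PAGES = [
    ("/old-seo-guide", 404, 42),
    ("/2019/holiday-sale", 404, 3),
    ("/pricing", 200, 15),
    ("/retired-tool", 404, 18),
]

# Hypothetical mapping you decide on: where each dead URL's content lives now.
NEW_HOMES = {
    "/old-seo-guide": "/blog/technical-seo/",
    "/retired-tool": "/tools/",
}


def plan_redirects(rows, new_homes):
    """Return (old, new, referring_domains) for 404s with a new home, most-linked first."""
    plan = [
        (path, new_homes[path], domains)
        for path, status, domains in rows
        if status == 404 and path in new_homes  # pages without a mapping are skipped
    ]
    return sorted(plan, key=lambda item: item[2], reverse=True)


def as_redirect_rules(plan):
    """Render each entry as an Apache mod_alias rule: Redirect 301 <old path> <new path>."""
    return [f"Redirect 301 {old} {new}" for old, new, _ in plan]


if __name__ == "__main__":
    for rule in as_redirect_rules(plan_redirects(BROKEN_PAGES, NEW_HOMES)):
        print(rule)
```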

Improve SEO with Internal Linking

Internal links (links between your own pages) help visitors and search engines find and prioritize your content. They point users to related information, which improves navigation, and they tell search engines which pages you consider important. Using relevant keywords in the anchor text also gives those pages a better chance to rank in the SERPs.

Creating good internal links involves many factors, and tools such as Internal Link Opportunities in Site Audit make it easier to spot gaps. The report scans your content for keywords your site already ranks for and suggests contextual links to relevant internal pages. For example, say you have a guide about duplicate content that mentions “faceted navigation.” Site Audit detects that you have a page devoted to faceted navigation and flags that keyword as an internal linking opportunity. Linking the phrase in your duplicate content guide to your faceted navigation page creates an internal, contextual link.

Contextual internal links between relevant pages reinforce topical relevance, spread page authority, and give users a path from a brief mention to a more in-depth resource.

Running a tool like Internal Link Opportunities regularly keeps your internal linking strategy up to date as your content and keywords evolve, and it’s an easy way to create SEO impact. You can also prioritize the opportunities it finds to build a coherent, search-friendly site that gives visitors a better experience and helps your rankings.
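The idea behind internal link suggestions can be illustrated with a simple sketch: look for pages that mention another page’s target keyword but don’t link to it yet. This is not how Site Audit works internally; the page data and the `link_opportunities` name are hypothetical, and the matching is only whole-phrase and case-insensitive.

```python
import re

# Hypothetical site data: each page's target keyword, body text, and existing internal links.
PAGES = {
    "/duplicate-content/": {
        "keyword": "duplicate content",
        "text": "Faceted navigation is a common source of duplicate content on e-commerce sites.",
        "links": set(),
    },
    "/faceted-navigation/": {
        "keyword": "faceted navigation",
        "text": "Filters create many URL variations, which can lead to duplicate content.",
        "links": {"/duplicate-content/"},  # already linked, so no suggestion
    },
}


def link_opportunities(pages):
    """Return (source page, keyword, target page) where the source mentions but doesn't link the target."""
    found = []
    for source, page in pages.items():
        for target, other in pages.items():
            if source == target or target in page["links"]:
                continue
            # \b keeps "faceted navigation" from matching inside a longer word.
            pattern = r"\b" + re.escape(other["keyword"]) + r"\b"
            if re.search(pattern, page["text"], flags=re.IGNORECASE):
                found.append((source, other["keyword"], target))
    return found
```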

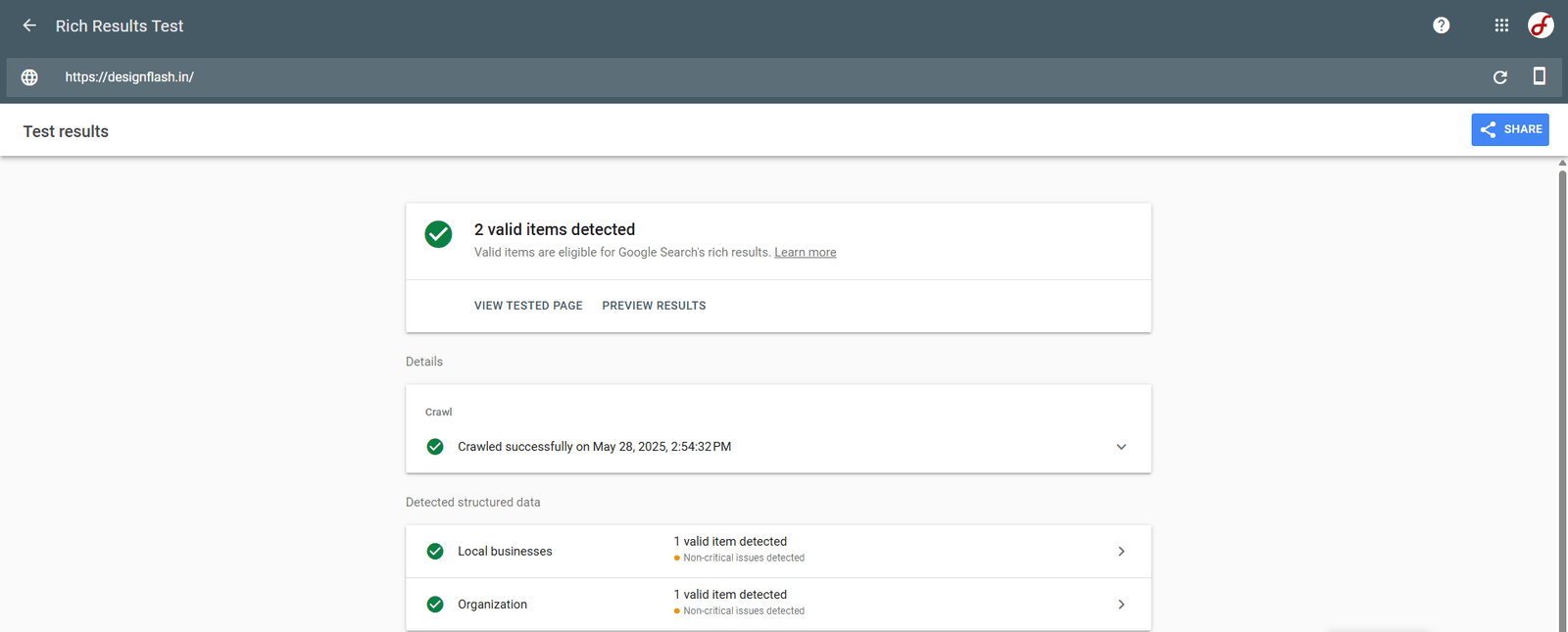

Add Schema Markup

Schema markup is structured data code that helps search engines interpret your content, which can make your pages eligible for features such as rich snippets, FAQs, or event listings in search results. These enhancements make your listings stand out, which can increase click-through rates. Google’s Search Gallery lists the search features available for content such as recipes, products, and articles, along with the schema markup each one requires. Following Google’s structured data guidelines improves your chances of earning these prominent displays on SERPs. Schema also clarifies the context of your content, helping search engines index and display your site accurately, which can increase your organic visibility and make your content more discoverable against heavy competition.
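Google recommends JSON-LD for structured data. Below is a minimal Python sketch that builds a schema.org `Article` object and wraps it in a `<script type="application/ld+json">` tag. The headline, author, date, URL, and the `article_jsonld` name are placeholders, and which properties you need depends on the feature you target in the Search Gallery. Test the result with Google’s Rich Results Test before shipping it.

```python
import json


def article_jsonld(headline, author, date_published, url):
    """Build a schema.org Article object and wrap it in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2025-06-17"
        "mainEntityOfPage": url,
    }
    # "<\/" is a valid JSON escape; it stops a stray "</script>" in the data from closing the tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    print(article_jsonld("Technical SEO Explained", "Jane Doe", "2025-06-17",
                         "https://www.example.com/technical-seo/"))
```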

Extra Technical SEO Enhancements

The projects in this section are useful, but they take more time and effort than the “quick wins” above. That’s not a knock on them; it’s guidance on what to prioritize. Tackle the urgent, high-impact fixes first, then work these heavier projects into your regular workflow. They aren’t mere nice-to-haves: they build the scaffolding and user experience that keep your site sustainable, even if you and your users don’t see the benefits right away. Striking a balance between quick wins and deeper investments is how you build a resilient, high-performing site over time.

Page Experience Signals

Page experience signals (such as load time, mobile-friendliness, and visual stability) are only minor ranking factors, but they focus on user satisfaction. Improving them alongside more traditional SEO work can boost engagement, reduce bounce rates, and build the trust that brings users back. The benefits of these UI/UX improvements won’t be immediate, but they pay off over time by giving your site a solid foundation.

Core Web Vitals

Core Web Vitals are Google’s metrics for real-world user experience, covering loading speed, interactivity, and visual stability. Largest Contentful Paint (LCP) measures how long the main content takes to load, Cumulative Layout Shift (CLS) measures how visually stable the layout is, and Interaction to Next Paint (INP), which replaced First Input Delay (FID) in March 2024, measures how quickly the page responds to user input. Improving Core Web Vitals leads to better user satisfaction and also helps with SEO by supporting Google’s page experience ranking factors.
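Google’s published thresholds make the three metrics easy to rate, and Google assesses them at the 75th percentile of page visits. This sketch hard-codes those thresholds (LCP in seconds, INP in milliseconds, CLS unitless). The helper names and sample values are made up, and the nearest-rank percentile is a simple approximation, not how Chrome UX Report data is computed.

```python
import math

# Google's Core Web Vitals thresholds: (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def p75(samples):
    """75th percentile by the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric, value):
    """Classify a metric value as "good", "needs improvement", or "poor"."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    lcp_samples = [1.8, 2.1, 2.4, 3.0]  # made-up LCP measurements in seconds
    print("LCP p75:", p75(lcp_samples), "->", rate("LCP", p75(lcp_samples)))
```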

HTTPS

HTTPS keeps data secure as it travels between browsers and servers, preventing it from being intercepted or tampered with in transit. It provides confidentiality (privacy), integrity (the data hasn’t been altered), and authentication (you’re communicating with the intended website, organization, or system). Today, modern websites use HTTPS by default, often indicated by a lock icon in the address bar, instead of unsecured HTTP, and most web traffic now relies on it for secure communication.

Mobile Optimization

Mobile-friendliness means your website looks good and works well on smartphones and tablets. Google Search Console’s “Mobile Usability” report used to flag these problems, but Google retired it (along with the Mobile-Friendly Test) in December 2023. Today, a Lighthouse audit in Chrome DevTools can help you find issues that make your site hard to use on mobile devices.

Interstitials

Interstitials are pop-ups that cover part or all of a webpage and block the main content. Often you have to close them or otherwise interact with them before you can reach the page’s core content. This can be irritating and hurts usability, especially on mobile devices, and intrusive pop-ups or interstitials can also harm your pages’ search rankings.

Website Maintenance & Health Check

These tasks probably won’t change your search rankings in a big way, but they’re still worth doing: they improve user experience and site usability and keep everything running smoothly.

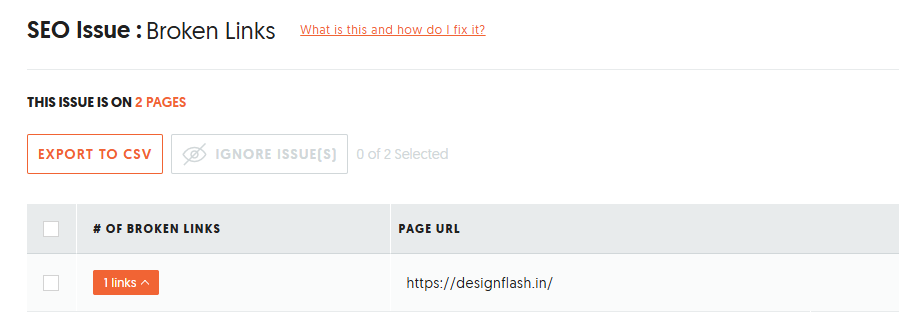

Broken Links

Broken links are links on your website that point to a missing page, whether internal (within your domain) or external (on other sites). They frustrate users and can hurt your credibility.

To find broken links efficiently, use the Links report in Site Audit. It scans your entire website and identifies broken links that need attention, so you can quickly fix them and give users a smooth, reliable path to working pages.
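If you’d rather script a quick check yourself, the core of a broken link finder is simple: collect each page’s `<a href>` values, resolve them to absolute URLs, and flag any that return a 4xx or 5xx status. In this Python sketch, `status_of` is passed in so you can test it offline, and `http_status` is one possible implementation using `urllib`. The function names and sample pages are hypothetical, and some servers answer `HEAD` requests differently from `GET`.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def http_status(url):
    """One possible checker: send a HEAD request and return the status code.
    Network failures (urllib.error.URLError) are not handled in this sketch."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx/5xx responses arrive as HTTPError


def find_broken_links(pages, status_of=http_status):
    """pages maps a page URL to its HTML; returns (page, link, status) for 4xx/5xx links."""
    broken = []
    for page_url, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.hrefs:
            target = urljoin(page_url, href)  # resolve relative links against the page URL
            if not target.startswith(("http://", "https://")):
                continue  # skip mailto:, tel:, javascript: and similar links
            status = status_of(target)
            if status >= 400:
                broken.append((page_url, target, status))
    return broken
```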

Redirect Chains

Redirect chains occur when multiple redirects link together before reaching the final destination URL. These chains can slow down page load times and create a poor user experience.

To identify redirect chains on your site, use the Redirects report in Site Audit. This tool helps you detect and streamline unnecessary redirects, improving site performance and ensuring visitors reach their desired content faster.
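Given a redirect map (old URL to new URL, for example exported from your server configuration or a crawl), finding chains and flattening them takes only a few lines. This Python sketch uses hypothetical paths and function names; it raises an error on redirect loops instead of following them forever.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow the redirect map from url and return every hop, ending at the final URL."""
    hops = [url]
    while hops[-1] in redirects:
        next_url = redirects[hops[-1]]
        if next_url in hops:
            raise ValueError("redirect loop: " + " -> ".join(hops + [next_url]))
        hops.append(next_url)
        if len(hops) - 1 > max_hops:
            raise ValueError("too many redirects from " + url)
    return hops


def find_chains(redirects):
    """Return every path that takes more than one redirect to reach its destination."""
    chains = []
    for source in redirects:
        hops = resolve_redirect(source, redirects)
        if len(hops) > 2:  # source plus two or more redirects
            chains.append(hops)
    return chains


def flatten_redirects(redirects):
    """Point every redirecting URL straight at its final destination (a single hop)."""
    return {source: resolve_redirect(source, redirects)[-1] for source in redirects}
```

For example, with `{"/a": "/b", "/b": "/c", "/c": "/final"}`, `find_chains` reports `/a -> /b -> /c -> /final`, and `flatten_redirects` rewrites `/a` to go straight to `/final`.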

Technical SEO Tools for Website Optimization

Technical SEO can be tricky, which is why the right tools make a world of difference. In this section, we’ll walk you through some of the best technical SEO tools: what each one does, how it helps, and why it matters. Whether you’re learning for the first time or need a quick refresher, this guide should help you feel confident optimizing your website’s performance.

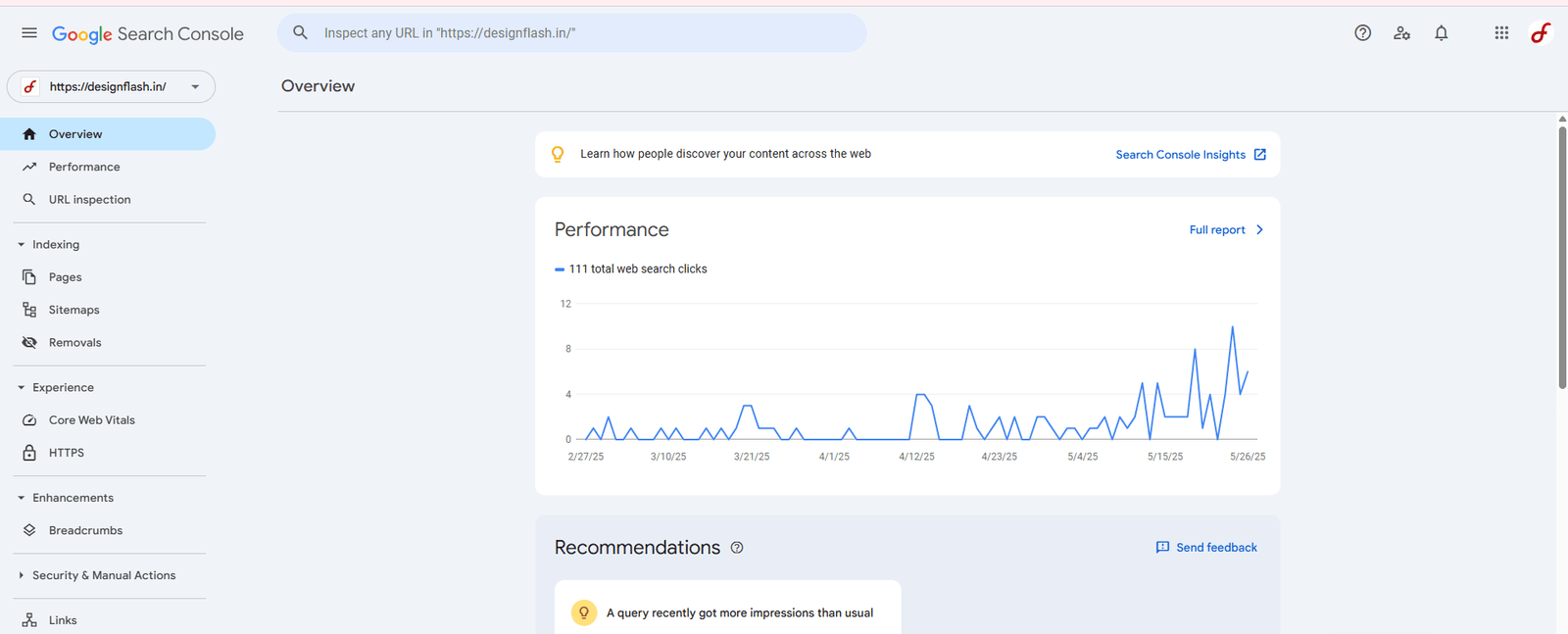

Google Search Console

Think of Google Search Console (GSC) as your key insight into what Google thinks of your site. This free tool (formerly called Google Webmaster Tools) helps you understand and diagnose how your site appears in Google Search results. You can find and fix technical issues (e.g., crawl errors), submit sitemaps, spot structured data errors, and view search traffic data straight from Google.

Other search engines, like Bing and Yandex, also have similar free tools. But what if you want a broader, deeper picture of your website’s overall SEO health beyond a single search engine?

Enter Ahrefs Webmaster Tools (AWT). It’s also free, and you can think of it as a complete SEO health checkup that complements GSC by helping you:

- Monitor your site’s SEO health. See where your technical foundation stands.

- Discover more than 100 possible SEO issues. Find what’s holding you back.

- Understand your backlink profile. See who is linking to you.

- Track keyword rankings. See where your pages show up in search.

- Estimate traffic potential for individual pages. See how many visits each page could gain.

- Find internal linking opportunities. Make restructuring your site’s links simpler.

Google’s Mobile-Friendly Test

Google’s Mobile-Friendly Test measured how easy your page was to use on smartphones and tablets, flagging usability pitfalls such as text too small to read, unsupported plugins, or touch elements placed too close together. It also showed what Google actually saw when it crawled your page, which could reveal rendering differences. Google retired this tool in December 2023; Lighthouse in Chrome DevTools now covers similar mobile checks, and the URL Inspection tool in Search Console shows how Googlebot renders a page.

To check how your structured data can appear in search results, use Google’s free Rich Results Test, which evaluates both mobile and desktop views.

PageSpeed Insights

PageSpeed Insights (PSI) is a free tool to analyze how fast your webpages load for visitors. It does not simply provide you with a performance score; it also provides detailed actionable recommendations that can help you improve loading times considerably.

PSI combines data from real users (Field Data) with a simulated lab test (Lab Data) to identify bottlenecks such as large images, render-blocking scripts, or bloated code. Its suggestions are specific and action-oriented, helping you optimize assets, use browser caching, and minify code. These fixes improve user experience, lower bounce rates, and can support your search rankings. It’s an essential tool for improving overall web performance.
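PSI also has a public API, handy for checking many pages at once. This sketch targets the v5 `runPagespeed` endpoint with the `url`, `strategy`, and `category` parameters. The response fields it reads (`lighthouseResult.categories.performance.score` and `loadingExperience.metrics`) are assumptions based on the v5 response format, so check them against the current API reference; the helper names are hypothetical. An API key is recommended for automated or frequent queries.

```python
import json
import urllib.parse
import urllib.request

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile", api_key=None):
    """Build a PSI v5 request URL; strategy is "mobile" or "desktop"."""
    params = {"url": page_url, "strategy": strategy, "category": "performance"}
    if api_key:
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urllib.parse.urlencode(params)


def fetch_report(page_url, strategy="mobile", api_key=None):
    """Call the API and return the decoded JSON response (requires network access)."""
    with urllib.request.urlopen(psi_request_url(page_url, strategy, api_key), timeout=120) as response:
        return json.load(response)


def performance_score(report):
    """Lab data: the Lighthouse score is 0-1 in the JSON; PSI displays it as 0-100."""
    return round(report["lighthouseResult"]["categories"]["performance"]["score"] * 100)


def field_lcp_ms(report):
    """Field data: LCP in milliseconds from real users, or None if the page has no field data."""
    metrics = report.get("loadingExperience", {}).get("metrics", {})
    return metrics.get("LARGEST_CONTENTFUL_PAINT_MS", {}).get("percentile")
```

Usage would look like `report = fetch_report("https://www.example.com/")` followed by `performance_score(report)`; keeping the parsing separate from the network call makes the helpers easy to test with a saved response.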

Chrome DevTools

Chrome DevTools is Chrome’s built-in suite of developer tools and a key resource for assessing and improving webpage performance. Use it to troubleshoot issues, speed up loading, optimize rendering, and analyze network activity.

From a technical SEO perspective, DevTools is an extremely useful tool. It lets you:

- Audit JavaScript rendering for search crawlers

- Find render-blocking resources

- Emulate mobile devices and throttled connections

- Inspect rendered HTML/CSS

- Dig into Core Web Vitals

Together, these checks uncover rendering issues before they affect how search engines see your pages.
